Imagine Internships

Gesture Controllers from Movies

Advisors

Rémi Ronfard, IMAGINE team

Contact: remi.ronfard@inria.fr (04 76 61 53 03)

Context

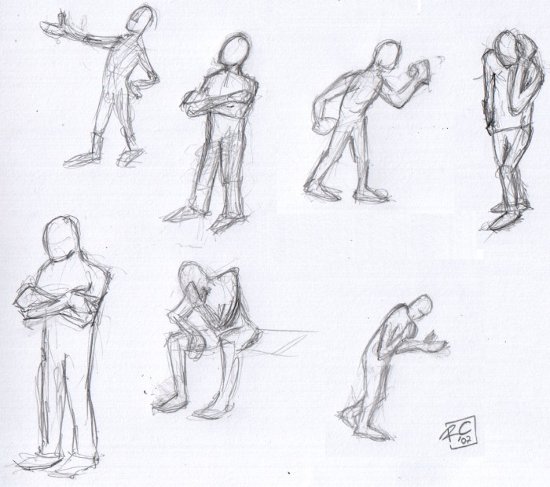

Generating expressive body language for virtual actors is a difficult task in computer animation.

Recently, Levine et al. [1] proposed "gesture controllers", which are Markov decision processes trained from examples to coordinate the gestures of a virtual actor with an input speech signal. Levine et al. used a training set of motion capture data.

In this Master's thesis, we would like to train similar models from real actors in real movies.

Objectives

Gesture controllers, as described by Levine et al., respond to speech by computing a mapping from speech prosody features to kinematic features. In this Master's thesis, we propose to learn such a mapping directly from audio and video recordings of real actors in real movie scenes.

Kinematic features can be extracted from the video tracks of the movie using a combination of actor detection and tracking, feature point detection and tracking, spatio-temporal interest point extraction, and histograms of oriented gradients and flows. Such features have proved useful in characterizing and recognizing full body actions from video [2,3,4]. Here, we will use them to characterize the visual appearance of real actor movements and virtual actor movements in a similar framework, based on global image measurements that do not require knowledge of the details of the actor's anatomy.

Thus we propose to build a mapping from prosody features [5] to kinematic features computed from the video.

Following Weinland et al., we will track the actors and segment their trajectories into primitive gestures by detecting the minima of screen motion over time [2,3]. This will allow us to build a statistical mapping between prosody and kinematic features without estimating body pose or movement.
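As an illustration of the segmentation step, here is a minimal Python sketch that cuts a tracked actor's motion signal at its local minima. It is not the method of [2,3] as published. It assumes a hypothetical per-frame scalar `motion_energy` (for instance, the mean optical-flow magnitude inside the actor's bounding box), and the function names and the moving-average smoothing are choices made for this example only.

```python
import numpy as np


def segment_at_motion_minima(motion_energy, smooth_window=5):
    """Return frame indices where the smoothed motion energy has a local minimum.

    motion_energy: 1D sequence, one (assumed) motion magnitude per video frame.
    smooth_window: length of the moving-average filter (assumption of this sketch).
    """
    energy = np.asarray(motion_energy, dtype=float)
    # Moving average to suppress frame-level noise before looking for minima.
    kernel = np.ones(smooth_window) / smooth_window
    smoothed = np.convolve(energy, kernel, mode="same")
    # A frame is a minimum if it is strictly below its predecessor
    # and not above its successor (first and last frames are excluded).
    interior = smoothed[1:-1]
    is_min = (interior < smoothed[:-2]) & (interior <= smoothed[2:])
    return np.flatnonzero(is_min) + 1


def split_into_gestures(n_frames, boundaries):
    """Turn boundary frames into (start, end) intervals, end exclusive."""
    cuts = [0, *np.asarray(boundaries).tolist(), n_frames]
    return [(a, b) for a, b in zip(cuts[:-1], cuts[1:]) if b > a]


if __name__ == "__main__":
    # Synthetic signal: bursts of motion separated by short pauses.
    t = np.linspace(0.0, 4.0 * np.pi, 200)
    energy = np.abs(np.sin(t))
    boundaries = segment_at_motion_minima(energy)
    print(split_into_gestures(len(energy), boundaries))
```

Each resulting interval is a candidate primitive gesture whose kinematic features can be paired with the prosody features of the same time span.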

Instead, we will use the mapping to choose gestures for the virtual actor from its own private library of gestures, so that it best mimics the kinematic features predicted from the input speech signal.
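The selection step can be sketched as a nearest-neighbour lookup. The following Python example assumes that every gesture in the library has been rendered and described by the same kind of kinematic feature vector as the movie footage. The Euclidean distance and the function name `choose_gesture` are assumptions of this sketch, not part of the proposal.

```python
import numpy as np


def choose_gesture(predicted_features, library_features):
    """Return the index of the library gesture closest to the prediction.

    predicted_features: 1D array of kinematic features predicted from speech.
    library_features: 2D array with one row of kinematic features per gesture.
    """
    library = np.asarray(library_features, dtype=float)
    target = np.asarray(predicted_features, dtype=float)
    # Euclidean distance between the prediction and every library gesture.
    distances = np.linalg.norm(library - target, axis=1)
    return int(np.argmin(distances))


if __name__ == "__main__":
    # Three hypothetical gestures described by two features each.
    library = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
    print(choose_gesture([0.9, 1.2], library))
```

A per-frame or per-segment lookup like this ignores temporal continuity. A sequence model would additionally penalize implausible transitions between consecutive gestures.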

To demonstrate the proposed approach, the candidate will extract prosody and kinematic features from a sample of movie scenes available in the IMAGINE team. Then, they will train a conditional random field (CRF) that maps prosody features to kinematic features for each actor in the training set. Finally, they will use a library of (keyframe-based) gestures for a simplified, rigged virtual actor to generate movements that best map an arbitrary input speech signal to 3D animation.

This work is expected to lead to a PhD thesis that will extend the results to full body actions reacting to input signals other than speech, and to the generation of coordinated movements of multiple actors, as part of our research program on narrative environments for digital storytelling.

Keywords

Virtual actors, gesture controllers, Markov decision processes.

References

[1] Sergey Levine, Philipp Krahenbuhl, Sebastian Thrun, Vladlen Koltun. Gesture Controllers. ACM SIGGRAPH 2010.

[2] Daniel Weinland, Edmond Boyer, Remi Ronfard. Action Recognition from Arbitrary Views using 3D Exemplars. IEEE International Conference on Computer Vision (ICCV) - 2007.

[3] Daniel Weinland, Remi Ronfard, Edmond Boyer. Automatic Discovery of Action Taxonomies from Multiple Views. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) - 2006.

[4] Daniel Weinland, Remi Ronfard, Edmond Boyer. A Survey of Vision-Based Methods for Action Representation, Segmentation and Recognition. Computer Vision and Image Understanding 115(2): 224-241 (2011).

[5] Couper-Kuhlen, Elizabeth and Cecilia E. Ford (eds.) Sound Patterns in Interaction: Cross-linguistic studies from conversation. Typological Studies in Language. 2004.